Introduction

We discussed logistic regression in a previous article. In this article we will explore how to do logistic regression the bayesian way using the rstanarm package in R.

The benefit of taking a bayesian approach is that it allows us to model the uncertainty in our parameter estimates directly.

Below we explore Bayesian logistic regression for explaining customer choices between two products, and run a simple scenario to determine how changing the price is likely to impact the probability of selection.

Simulating Data

We will use the same simulated data set as we did in part one, where 1 indicates a customer chose a smoothie and 0 indicates they chose a milkshake.

library(tidyverse)

library(statmod)

library(rstanarm)

options(mc.cores = 4)

InvLogit <- function(x) {

exp(x)/(1+exp(x))

}

set.seed(123)

Sex <- rbinom(1000, 1, 0.25)

Price <- rnorm(1000, 10, 3)

# Sunday to Saturday

Day <- sample(1:7, 1000, T) - 1

Income <- sample(seq(50, 150, by = 10), 1000, replace = T)

Raw <- InvLogit(((Day * .2) + (Price * -0.2) + (Sex * .02) + (Income * .02) + rnorm(1000, 0, 0.1)))

Data <- data.frame(cbind(Sex = Sex, Price = Price, Day = Day, Income = Income, Prob = Raw))

Data$Choice <- rbinom(1:1000, 1, Data$Prob)Bayesian Model

Rstanarm makes it very simple to run a bayesian binomial model. The only difference, assuming we are fine non-informative priors, is the function is stan_glm().

glm.stan <- stan_glm(Choice ~ Price + Day + Income,

family = "binomial", data = Data)

summary(glm.stan, probs = seq(0,1, by = .1))Model Info:

function: stan_glm

family: binomial [logit]

formula: Choice ~ Price + Day + Income

algorithm: sampling

sample: 4000 (posterior sample size)

priors: see help('prior_summary')

observations: 1000

predictors: 4

Estimates:

mean sd 0% 20% 40% 60% 80% 100%

(Intercept) -0.1 0.3 -1.3 -0.4 -0.2 0.0 0.2 1.1

Price -0.2 0.0 -0.3 -0.2 -0.2 -0.2 -0.2 -0.1

Day 0.2 0.0 0.0 0.2 0.2 0.2 0.2 0.4

Income 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

Fit Diagnostics:

mean sd 0% 20% 40% 60% 80% 100%

mean_PPD 0.6 0.0 0.6 0.6 0.6 0.6 0.6 0.7 We can also add a weakly informative prior to our model. This will increment the log probability mass function in Stan with the value of the prior which operates to constrain the estimate and can help with model fit.

We can set a weak normal prior on our predictors with the prior parameter. This would be more important if we had a smaller dataset, with 1,000 observations the prior will get washed out very quickly.

glm.stan <- stan_glm(

Choice ~ Price + Day + Income,

family = "binomial",

data = Data,

prior = normal(0, 1)

)

summary(glm.stan, probs = seq(0,1, by = .2))Model Info:

function: stan_glm

family: binomial [logit]

formula: Choice ~ Price + Day + Income

algorithm: sampling

sample: 4000 (posterior sample size)

priors: see help('prior_summary')

observations: 1000

predictors: 4

Estimates:

mean sd 0% 20% 40% 60% 80% 100%

(Intercept) -0.1 0.3 -1.3 -0.4 -0.2 0.0 0.2 1.4

Price -0.2 0.0 -0.3 -0.2 -0.2 -0.2 -0.2 -0.1

Day 0.2 0.0 0.1 0.2 0.2 0.2 0.2 0.3

Income 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

Fit Diagnostics:

mean sd 0% 20% 40% 60% 80% 100%

mean_PPD 0.6 0.0 0.6 0.6 0.6 0.6 0.6 0.7 In this case, the estimate of Day ends up being a little more stable after being constrained by the prior. In the previous article there were some issues with our right tail not being exactly normal, so this is a welcome development.

There are many priors available in rstanarm and they can be set on the predictors, covariance, or the intercept as required.

Diagnostics

We also want to check our Rhat values to ensure they are all at 1.0 as that indicates that the four chains have converged. Lack of convergence indicates problems in the model which will cause problems with estimation.

MCMC diagnostics

mcse Rhat n_eff

(Intercept) 0.0 1.0 3088

Price 0.0 1.0 2865

Day 0.0 1.0 1976

Income 0.0 1.0 3116

mean_PPD 0.0 1.0 1417

log-posterior 0.0 1.0 1288 Extracting Chains

Stan outputs a number of chains, the default is 4. These represent parameter estimates over 4,000 draws from simulation.

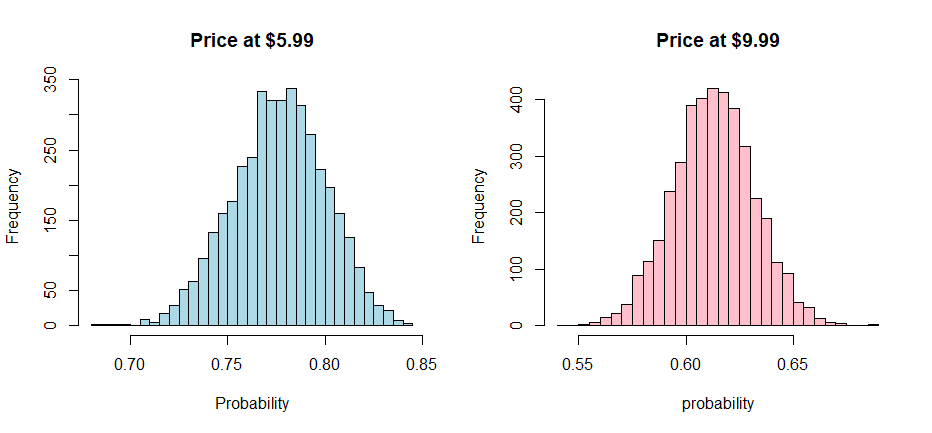

We will extract our chains into a dataframe and run two scenarios for different prices:

- Price $5.99, Wednesday (Day = 2), and 100K in customer income.

- Price $9.99, and otherwise the same as above.

Chains <- as.data.frame(glm.stan)

Chains

Distro <- InvLogit(Chains[1] + Chains[2] * 5.99 + Chains[3] * 2 + Chains[4] * 100)

colnames(Distro) <- "Choice"

Distro2 <- InvLogit(Chains[1] + Chains[2] * 9.99 + Chains[3] * 2 + Chains[4] * 100)

colnames(Distro2) <- "Choice" (Intercept) Price Day Income

1 -0.414007312 -0.1966041 0.27763770 0.02242109

2 0.206819685 -0.2040198 0.15188092 0.01985472

3 -0.222636608 -0.1765818 0.24442383 0.01889715

4 0.005384426 -0.2169745 0.18391246 0.02279556

5 -0.062183220 -0.1987167 0.19810591 0.02178055We can plot out histograms to give ourselves an understanding of the expected value and credible range of probability outcomes, given the two smoothie pricing scenarios.

par(mfrow = c(1,2))

hist(Distro$Choice, breaks = 50, col="lightblue",

xlab = "Probability", main = "Price at $5.99")

hist(Distro2$Choice, breaks = 50, col="pink",

xlab = "probability", main = "Price at $9.99")

Unsurprisingly, the probability of purchasing a smoothie is higher with a lower price. However, the benefit of this approach is that we can understand the range which can be helpful for sensitivity analysis.

The bayesian approach also shows us that these distributions do not overlap, the difference is large enough that we are unlikely to see the same results at $9.99 as we would at $5.99. In other words, the worse case $5.99 scenario is expected to exceed what $9.99 is capable of.

Differences

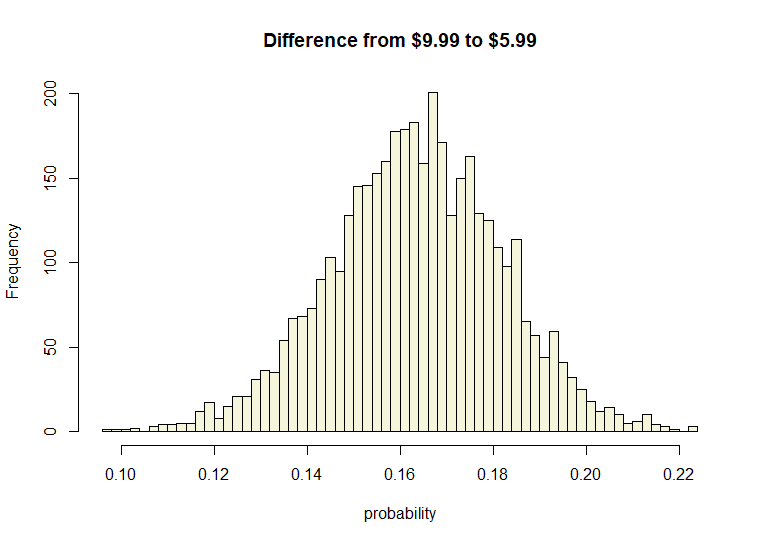

Since we have simulated outcomes to work with, we can also easily visualize the expected difference in range by simply subtracting one vector from the other.

Diff <- Distro - Distro2

par(mfrow = c(1,1))

hist(Diff$Choice, breaks = 50, col="beige", xlab = "probability",

main = "Difference from $9.99 to $5.99")

We can see that the expected difference is 0.16 over a range of 0.10 to 0.22 on the probability scale. This is much easier to work with and communicate than traditional methods.

The difference in means, and variance will be the same as in manual calculations. Note that VAR(X)-VAR(Y) is equal to the the covariance of differences.

mean(Distro$Choice) - mean(Distro2$Choice)

mean(Diff$Choice)

sqrt(cov(Distro$Choice - Distro2$Choice, Distro$Choice - Distro2$Choice))

sd(Diff$Choice)> mean(Distro$Choice) - mean(Distro2$Choice)

[1] 0.1634142

> mean(Diff$Choice)

[1] 0.1634142

> sqrt(cov(Distro$Choice - Distro2$Choice, Distro$Choice - Distro2$Choice))

[1] 0.01843171

> sd(Diff$Choice)

[1] 0.01843171The bayesian method is simpler and easier to work with than prediction confidence intervals. More importantly, it is easier to communicate.

Conclusion

Stan, the rstanarm package, and advances in computing power have made Bayesian data modeling incredibly convenient. This is a great approach for business intelligence where we may want to incorporate prior knowledge, and understand the bounds of risk and reward.

There are huge benefits to getting familiar with this approach, and rstanarm is a great way to start.

References

Matsurra, K. Bayesian Statistical Modeling with Stan, R, and Python. Springer, 2022.

Gelman, A; Carlin, J. et al. Bayesian Data Analysis, 3rd Ed. Chapman & Hall/CRC, 2013.

Recent Post

Excel Histograms

- 31 August 2025

- 3 min read

Combine Data in Excel with Power Query

- 31 July 2025

- 6 min read

Excel Radar Charts for Demographics

- 28 June 2025

- 3 min read

Writing Unstructured Data with Sink in R

- 25 May 2025

- 4 min read

Sales Lift Estimation with tools4uplift

- 30 April 2025

- 11 min read