Introduction

Transformations are changes applied to our variables, most often the response, that can be used to address issues such as non-constant (heteroscedastic) variance, or to make interpretation easier, as in the log-log price elasticity model.
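To make the elasticity example concrete: in a log-log model the slope on log(price) is read directly as the price elasticity. The minimal base R sketch below uses simulated data. The demand curve, the elasticity of -1.5 and the noise level are assumptions chosen for illustration and have nothing to do with the car dataset.

```r
# Simulated demand with an assumed constant price elasticity of -1.5:
# quantity = 500 * price^(-1.5) * multiplicative noise
set.seed(2024)
price    <- runif(200, min = 5, max = 50)
quantity <- 500 * price^(-1.5) * exp(rnorm(200, sd = 0.05))

# Log-log model: log(quantity) = a + b * log(price)
fit <- lm(log(quantity) ~ log(price))

# The slope b is the elasticity: a 1% change in price is associated
# with roughly a b% change in quantity
elasticity <- unname(coef(fit)["log(price)"])
elasticity
```

On the original scale the same relationship is a curve, so the log-log form is what lets a single coefficient carry this interpretation.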

MLR3 Pipelines provides an automated way to handle transformations and, where required, to transform predictions back to the original scale.

Let’s explore a few ways to use transformations with the MLR3 package and mlr3pipelines in particular.

Dataset

We will use a freely available Kaggle dataset that lists car prices based on an assortment of features such as length, horsepower, and city mileage.

Preparing Data

First we will do some preparation on our dataset and create a regression task.
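Most of the preparation is dropping columns, but recoding cylindernumber from words to numbers deserves a note, because read.csv() with stringsAsFactors = TRUE turns it into a factor. As a standalone sketch, a base R named-vector lookup does the same job as the case_when() call in the code that follows. The names cyl_lookup and cyl are illustrative and not part of the original code.

```r
# Named lookup vector: word -> number of cylinders
cyl_lookup <- c(one = 1, two = 2, three = 3, four = 4, five = 5,
                six = 6, seven = 7, eight = 8, twelve = 12)

# Illustrative factor, similar to what read.csv(stringsAsFactors = TRUE) produces
cyl <- factor(c("four", "six", "twelve", "four", "eight"))

# Index by the labels: gives 4 6 12 4 8
cyl_num <- unname(cyl_lookup[as.character(cyl)])

# Pitfall: indexing with the factor itself uses its integer codes
# (levels sort alphabetically: eight, four, six, twelve), giving 2 3 4 2 1
wrong <- unname(cyl_lookup[cyl])
```

Like case_when() without a default, the lookup returns NA for any label it does not recognise.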

```r
options(mc.cores = 4)

library(tidyverse)
library(mlr3verse)
library(mlr3pipelines)

CarPrices <- read.csv("CarSales.csv", stringsAsFactors = TRUE)

# Drop columns we will not use as features
CarPrices$curbweight <- NULL
CarPrices$symboling <- NULL
CarPrices$CarName <- NULL
CarPrices$car_ID <- NULL

# Convert the cylinder count from words to numbers
CarMod <- mutate(
  CarPrices,
  cylindernumber = case_when(
    cylindernumber == "one" ~ 1,
    cylindernumber == "two" ~ 2,
    cylindernumber == "three" ~ 3,
    cylindernumber == "four" ~ 4,
    cylindernumber == "five" ~ 5,
    cylindernumber == "six" ~ 6,
    cylindernumber == "seven" ~ 7,
    cylindernumber == "eight" ~ 8,
    cylindernumber == "twelve" ~ 12
  )
)

# Regression task with price as the target
PriceTask <- TaskRegr$new(
  id = "PriceTask",
  backend = CarMod,
  target = "price"
)
```

Create Learner

For this example we will create a single regression learner using glmnet.
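For reference, glmnet's Gaussian family fits an elastic-net penalized least-squares model. As we understand the mlr3learners interface, alpha in the learner below is the mixing parameter α (0 gives ridge, 1 gives lasso) and s is the value of the penalty λ used at prediction time. Once the learner sits inside the target transformation later on, y is log(price).

$$
\min_{\beta_0,\,\beta}\; \frac{1}{2N}\sum_{i=1}^{N}\left(y_i - \beta_0 - x_i^\top \beta\right)^2 + \lambda\left[\frac{1-\alpha}{2}\lVert\beta\rVert_2^2 + \alpha\lVert\beta\rVert_1\right]
$$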

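```r
# glmnet learner with tuning tokens for the penalty value (s)
# and the elastic-net mixing parameter (alpha)
GLearner = lrn("regr.glmnet",
  id = "GlmModel",
  s = to_tune(p_int(0, 15)),
  alpha = to_tune(p_dbl(0, 1))
)
```

Independent Variables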

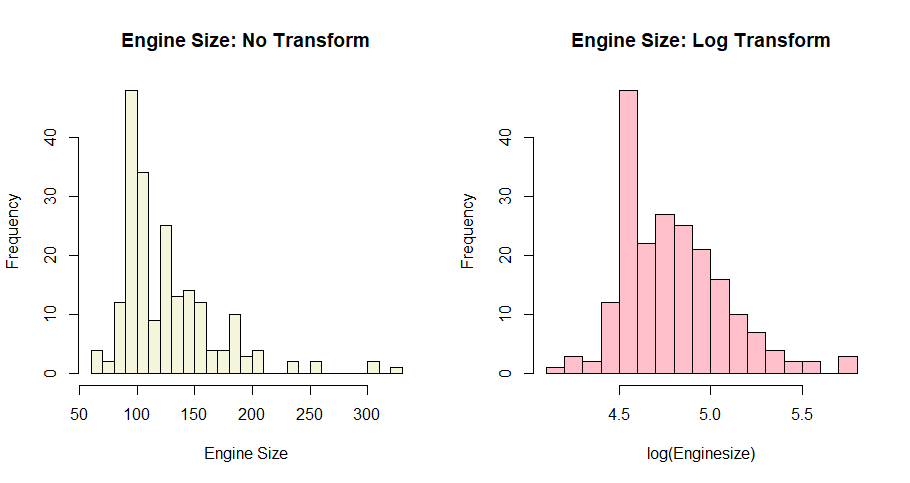

The engine size variable is not normally distributed, so to demonstrate the mutate functionality we will apply a log transformation. In this case it helps somewhat, but there is still a lot of density near 4.5 on the log scale.

Note that mlr3pipelines has dedicated pipe operators for common transformations such as Box-Cox and Yeo-Johnson (po("boxcox") and po("yeojohnson")). Using these operators may be preferable when we don't need the flexibility of a custom mutation function.
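One reason a Box-Cox operator can stand in for a hand-written log is that the log transform is the λ = 0 member of the Box-Cox family. The sketch below uses a hypothetical box_cox() helper written for illustration; it is not the mlr3pipelines operator, and unlike that operator it takes λ as a fixed input rather than choosing it from the data.

```r
# Hypothetical helper: one-parameter Box-Cox transform (strictly positive y)
box_cox <- function(y, lambda) {
  stopifnot(all(y > 0))
  if (abs(lambda) < 1e-12) {
    log(y)                      # the lambda = 0 case is defined as log(y)
  } else {
    (y^lambda - 1) / lambda
  }
}

y <- c(61, 97, 130, 209, 326)   # illustrative positive values

box_cox(y, 0)                   # exactly log(y)
box_cox(y, 1e-6)                # a tiny lambda is already very close to log(y)
box_cox(y, 1)                   # lambda = 1 is only a shift: y - 1
```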

```r
# Side-by-side histograms of engine size before and after a log transform
par(mfrow = c(1, 2))
hist(CarMod$enginesize, breaks = 20, main = "Engine Size: No Transform",
     xlab = "Engine Size", col = "beige")
hist(log(CarMod$enginesize), breaks = 20, main = "Engine Size: Log Transform",
     xlab = "log(Enginesize)", col = "pink")
```
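To put a number on "not normally distributed", sample skewness is a quick check to run alongside the histograms. This sketch defines a hypothetical sample_skewness() helper in base R and tries it on simulated log-normal data standing in for a right-skewed variable like engine size; the data are illustrative, not the car prices.

```r
# Hypothetical helper: moment-based sample skewness, no packages needed
sample_skewness <- function(x) {
  x <- x[!is.na(x)]
  m <- mean(x)
  # third central moment divided by (second central moment)^(3/2)
  mean((x - m)^3) / mean((x - m)^2)^(3 / 2)
}

# Simulated right-skewed data; because it is log-normal,
# the log transform should remove most of the skew
set.seed(42)
x_raw <- rlnorm(5000, meanlog = 4.8, sdlog = 0.5)

skew_raw <- sample_skewness(x_raw)
skew_log <- sample_skewness(log(x_raw))

round(c(raw = skew_raw, log = skew_log), 2)
```

On the real data the same comparison would be sample_skewness(CarMod$enginesize) against sample_skewness(log(CarMod$enginesize)). Given the remaining density near 4.5, the log version need not come out close to zero there.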

Transformation Pipeline

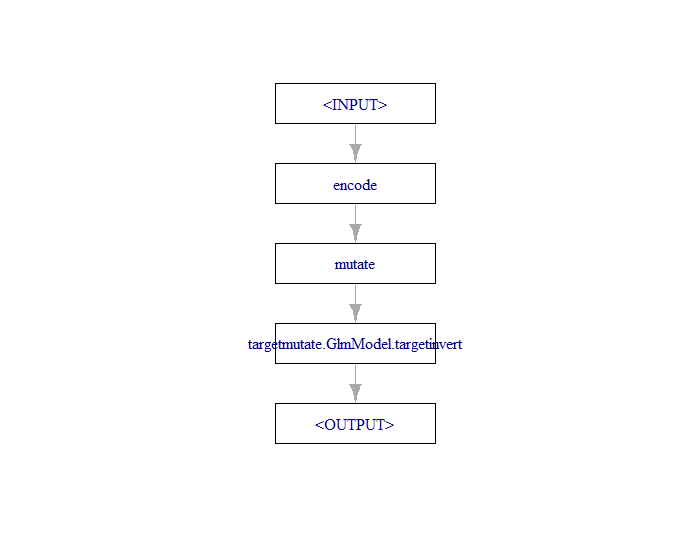

We’ll create a section of pipeline that one-hot encodes the categorical features, applies a log transformation to engine size, and finally log-transforms the target.

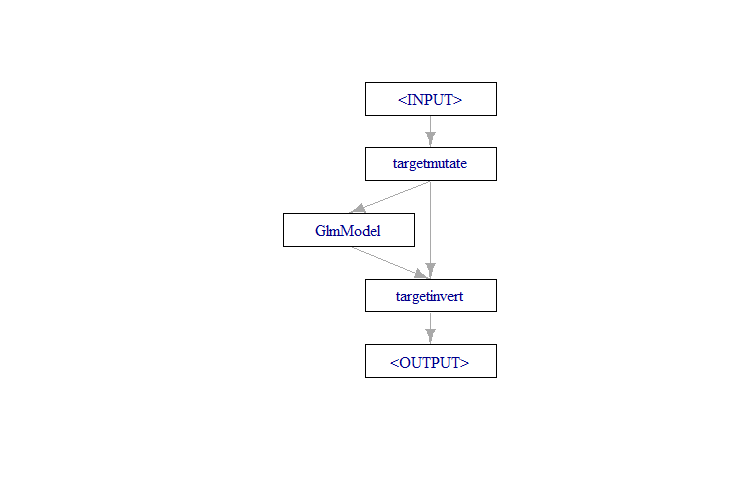

The final step uses a pre-built pipeline available in mlr3pipelines called pipeline_targettrafo, which sets up the target transformation. The TRAFO section contains a target-mutation step that passes the transformed target to the learner and hands an inversion function on to the inverter. The inverter then applies that function to the learner's predictions to carry out the back-transformation.

This can also be built from scratch with the target mutate and target invert pipe operators (po("targetmutate") and po("targetinvert")). However, using the existing pipeline for a standard procedure saves time and avoids having to track which inputs feed which output channels of the component operators.
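Stripped of the pipeline machinery, the trafo/inverter pattern amounts to: fit on the transformed target, predict on that scale, then invert. A minimal base R sketch on simulated log-linear data follows. lm() stands in for glmnet, and trafo and inverter are plain functions that only mirror the pipeline's parameter names; none of this is the mlr3pipelines implementation.

```r
# Simulated data with a multiplicative (log-linear) relationship
set.seed(1)
train <- data.frame(x = 1:50)
train$y <- exp(1 + 0.05 * train$x + rnorm(50, sd = 0.01))
new_data <- data.frame(x = c(10, 25, 40))

# Plain functions playing the roles of the pipeline's trafo and inverter
trafo    <- function(y) log(y)      # applied to the target before fitting
inverter <- function(pred) exp(pred) # applied to predictions afterwards

train$log_y <- trafo(train$y)
fit <- lm(log_y ~ x, data = train)            # the model only sees log(y)
pred_log <- predict(fit, newdata = new_data)  # predictions on the log scale
pred_original <- unname(inverter(pred_log))   # back on the original scale
pred_original
```

Exponentiating a log-scale prediction estimates the conditional median rather than the mean on the original scale. With residual variance as small as in this simulation the difference is negligible.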

The learner we are specifying below is simply a clone of the above glmnet model, including the same tuning tokens.

Note that while the manual suggests returning the inverter's result as a named list, we found that simply using exp(x) in the inverter also mapped correctly to the response, just as it does in the trafo function.

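```r
par(mfrow = c(1, 1))  # reset the plotting layout

## Feature Encoder
Encoder <- po("encode")  # one-hot encodes factor features by default

## Mutation on Independent Vars
# Adds a new feature PM = log(enginesize); the original enginesize column
# is kept alongside it unless the mutation reuses its name
mutate.pipe <- po("mutate")
mutations = list(
  PM = ~log(enginesize)
)
mutate.pipe$param_set$values$mutation = mutations

## Target TRAFO
# Wrap a clone of the glmnet learner (tuning tokens included)
# in the pre-built target-transformation pipeline
tt <- pipeline_targettrafo(PipeOpLearner$new(GLearner$clone()))
# log-transform the target before fitting...
tt$param_set$values$targetmutate.trafo = function(x) log(x)
# ...and exponentiate the predicted response to return to the price scale
tt$param_set$values$targetmutate.inverter = function(x) list(response = exp(x$response))
```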

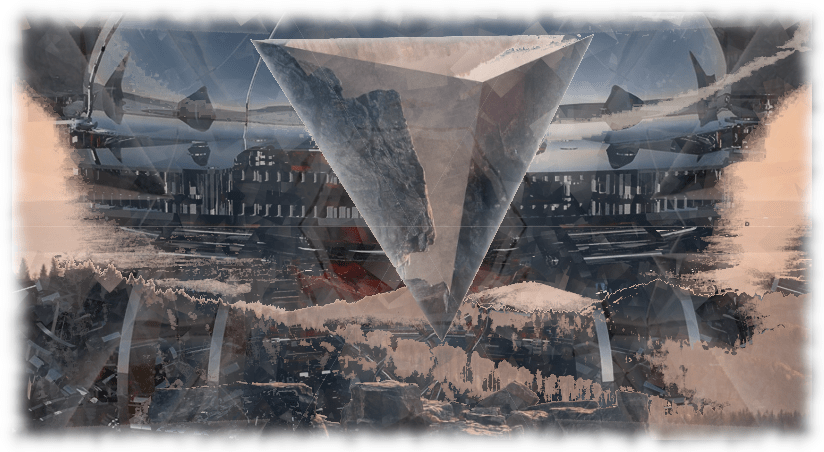

plot(tt)
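The mutation list uses a one-sided formula whose right-hand side is evaluated using the task's columns. As a rough base R illustration of the effect on a data frame (car_demo and its engine sizes are made-up values, and demo_mutations is a copy of the list above), the PM entry behaves like adding a new column:

```r
# Illustrative engine sizes, not taken from the dataset
car_demo <- data.frame(enginesize = c(97, 130, 152))

demo_mutations <- list(PM = ~log(enginesize))

# Evaluate the formula's right-hand side with the data frame's columns in scope
pm_values <- eval(demo_mutations$PM[[2]], envir = car_demo)

# Equivalent base R: add PM as a new column while keeping enginesize
car_demo_mutated <- transform(car_demo, PM = log(enginesize))
car_demo_mutated
```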

Next we will build out the remainder of the transformation pipeline: first we convert this section into an integrated learner with as_learner(), then we chain the steps together with the pipeline operator %>>% and plot the result.

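```r
# Turn the target-transformation section into a single learner
TrafoLearn <- as_learner(tt, id = "TRAFO")

# Chain encoding -> feature mutation -> transformed-target learner
graph <- Encoder %>>% mutate.pipe %>>% TrafoLearn
graph$plot()

# Wrap the full pipeline as one learner so it can be tuned and trained as a unit
FinalLearner <- as_learner(graph)
```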

Tuning the Pipeline

Once our mutations are set up, we can tune our hyperparameters as usual. A more detailed explanation is available in another article, Benchmarking Vehicle Prices with MLR3. In short, we specify a tuning strategy: in this case, Bayesian optimization run for 120 seconds using 3-fold cross-validation.

The entire pipeline we created is tuned as a single, unified learner, so the encoding, mutation and target transformation are all applied within every resampling iteration. In addition, when we predict on new data, the same transformations are applied to it automatically and the predictions are back-transformed.
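In 3-fold cross-validation, each candidate configuration is trained three times, each time holding out a different third of the training rows for scoring, and the three scores are averaged. The base R sketch below shows that fold logic with a hypothetical make_folds() helper on 20 illustrative rows. It is not how mlr3's rsmp("cv", folds = 3) is implemented, only what it computes conceptually.

```r
# Hypothetical helper: randomly assign each of n rows to one of k folds
make_folds <- function(n, k = 3) {
  sample(rep(seq_len(k), length.out = n))
}

set.seed(123)
n_rows  <- 20                      # small illustrative number of rows
fold_id <- make_folds(n_rows, k = 3)

for (fold in 1:3) {
  test_rows  <- which(fold_id == fold)
  train_rows <- which(fold_id != fold)
  # a candidate configuration would be trained on train_rows and
  # scored (e.g. by RMSE) on test_rows; the three scores are then averaged
  cat(sprintf("fold %d: %d train rows, %d test rows\n",
              fold, length(train_rows), length(test_rows)))
}
```

Every row is held out exactly once, which is why the averaged score is a fairer guide for choosing s and alpha than a single split.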

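```r
### Sampling Loop
library(mlr3mbo)  # provides the "mbo" (Bayesian optimization) tuner

resampling_inner = rsmp("holdout")
resampling_outer = rsmp("cv", folds = 3)
terminator = trm("run_time", secs = 120)
tuner = tnr("mbo")
measure = msr("regr.rmse")

# 90/10 train/test split
Splits = partition(PriceTask, ratio = 0.9, stratify = TRUE)

# Run in parallel on 4 background R sessions
future::plan(future::multisession, workers = 4)

set.seed(123)
instance = tune(
  tuner = tuner,
  # tune on a copy restricted to the training rows; filtering PriceTask
  # itself would remove the test rows needed for prediction later
  task = PriceTask$clone()$filter(rows = Splits$train),
  learner = FinalLearner,
  resampling = rsmp("cv", folds = 3),
  measures = measure,
  terminator = terminator
)

instance$result_learner_param_vals
instance$result
```

Training and Prediction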

Once we have our tuned parameters, we can train our model and see how well it does on the held-out test data.

```r
# Copy the pipeline learner and apply the tuned hyperparameters
FinalTuned = FinalLearner$clone()
FinalTuned$param_set$values = instance$result_learner_param_vals
FinalTuned$param_set$values

# Train on the training rows, then predict the held-out test rows
FinalTuned$train(PriceTask, row_ids = Splits$train)$model
ResultsEL <- FinalTuned$predict(PriceTask, row_ids = Splits$test)

# Test-set RMSE and the back-transformed predictions
sqrt(mean((ResultsEL$truth - ResultsEL$response)^2))
ResultsEL$response
```

```
> sqrt(mean((ResultsEL$truth - ResultsEL$response)^2))
[1] 1953.374
> ResultsEL$response
[1] 10655.279 18972.114 6943.257 7702.912 9760.490 8187.215 9958.276 12557.192 10388.676 6906.860 7204.251 8714.980 9168.476 13263.504
[15] 10928.470 15843.457 15859.587 20448.614 19376.529 31221.496
```

Our RMSE is 1,953, and our predictions have been back-transformed to the price scale as intended.
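Because the inverter has already exponentiated the predictions, the RMSE above is measured in price units rather than log units. The formula behind the manual calculation is shown here as a small base R function with a made-up check; the numbers are illustrative, not from the car data.

```r
# RMSE: square root of the mean squared prediction error
rmse <- function(truth, response) sqrt(mean((truth - response)^2))

# Made-up values: errors of -500, 1000 and -1000
truth    <- c(10000, 15000, 20000)
response <- c(10500, 14000, 21000)

rmse(truth, response)  # [1] 866.0254
```

In mlr3 the same figure for the test set should also be available as ResultsEL$score(msr("regr.rmse")).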

Conclusion

MLR3 is a flexible machine learning framework that makes it straightforward to transform both target and feature variables. Before writing your own transformations, check whether a pre-built pipe operator already exists, such as PCA, scale, Box-Cox, or Yeo-Johnson.


References

Bischl, B., Sonabend, R., et al. Applied Machine Learning Using mlr3 in R. CRC Press / Chapman & Hall, 2024.
