Introduction

Estimating sales uplift is difficult because we can never observe the same group of people in two different states at once: we cannot see how a customer behaves both with and without a promotion.

There are simple strategies that attempt to calculate lift based on the sales difference relative to a historic average, but these methods have serious problems. For example, if long-term sales are declining, the promotion will appear less effective than it really is, even if the effect is large enough to detect in aggregate data.
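A small numeric illustration of this problem may help. The figures are made up for the example: the 3% monthly decline and the 10% true lift are assumptions, not data from a real promotion.

```r
# Hypothetical figures: sales fall 3% per month for a year,
# then a promotion in month 13 adds a true 10% lift.
months       <- 1:12
past_sales   <- 1000 * 0.97^(months - 1)
historic_avg <- mean(past_sales)

no_promo_13 <- 1000 * 0.97^12        # month 13 without the promotion
promo_13    <- no_promo_13 * 1.10    # month 13 with the promotion

naive_lift <- promo_13 / historic_avg - 1   # compared with the historic average
true_lift  <- promo_13 / no_promo_13 - 1    # compared with the unobserved counterfactual

round(c(naive = naive_lift, true = true_lift), 3)
```

The naive figure comes out negative even though the promotion worked, because the historic average is inflated by older, higher sales.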

There are better strategies for estimating uplift. This article focuses on the tools4uplift package in R, which can aid our understanding of causal effects for binary outcome variables such as whether the customer bought a product, or whether year-over-year sales improved relative to the control group.

This package goes a step beyond average treatment effects and clusters groups of customers based on their propensity to respond to the promotion. This allows us to more precisely distinguish the customers who responded to a promotion from customers who would have bought the item anyway.

Tools4Uplift Package

For those interested in the detailed mathematics, the package authors have a paper that covers it. Note that the function names and operational details of the package have changed to make use of S3 classes since this paper was published, but it remains a great reference.

The package utilizes a dual uplift model, which is essentially the difference between the predictions of two logistic regression models:

- The probability of the outcome of interest (Y = 1) among people who have been treated.

- The probability of the outcome of interest (Y = 1) among people who are untreated.
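The two-model idea can be sketched in base R with glm(). This is only an illustration under stated assumptions, not the package's internals: the simulated data, the column names (treat, x, y), and the effect sizes are all hypothetical.

```r
set.seed(42)

# Hypothetical data: one predictor x, a randomized treatment,
# and a treatment effect that grows with x.
n <- 2000
d <- data.frame(treat = rbinom(n, 1, 0.5), x = rnorm(n))
d$y <- rbinom(n, 1, plogis(-0.5 + 0.3 * d$x + d$treat * (0.4 + 0.6 * d$x)))

# One logistic regression per arm
fit_treated <- glm(y ~ x, family = binomial, data = d[d$treat == 1, ])
fit_control <- glm(y ~ x, family = binomial, data = d[d$treat == 0, ])

# Predicted uplift = P(Y = 1 | x, treated) - P(Y = 1 | x, untreated)
new_customers <- data.frame(x = c(-1, 0, 1))
uplift <- predict(fit_treated, new_customers, type = "response") -
  predict(fit_control, new_customers, type = "response")
uplift
```

Each customer gets two predicted probabilities, and the difference between them is the predicted uplift used to rank customers.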

The dual uplift models can include interaction terms or not. In addition, the package includes helpful tools for splitting data, quantizing continuous predictors, and evaluating models.

Example Data

Let’s generate some example data to explore that includes a positive lift for coupon, female gender, loyalty level (1-10), and prior sales.

options(scipen = 999)

library(tidyverse)

library(tools4uplift)

### Data Generation

set.seed(1234)

Customers <- data.frame(Customer = 1:1000)

Customers$Coupon <- rbinom(n = 1000, size = 1, prob = 0.5)

Customers$Gender <- ifelse(rbinom(n = 1000, size =1, prob = c(0.25, 0.75)), 1,0)

Customers$Loyal <- sample(1:10, 1000, replace = T)

Customers$PriorSales <- rnorm(1000, 1000, 300)

Customers$Prob <- pmin(0.1 + 0.5 * Customers$Coupon + 0.25 * Customers$Gender + 0.1 * Customers$Loyal + Customers$PriorSales * 0.0001, 0.95)

Customers$YoYSpend <- rbinom(n = 1000, size = 1, prob = Customers$Prob)

Customers$Prob <- NULL

Customers$Customer <- NULL

head(Customers)

Once we have our data prepared, it would be a good time to quantize any continuous variables prior to creating training and test splits. Tools4Uplift provides functions for splitting variables at points significant for uplift modeling. These will be discussed further below, so we will leave things as they are for the time being.

Training and Test Splits

Now that we have some data, we can divide it into training and test sets using a conventional 70/30 split. The tools4uplift package conveniently balances the splits by treatment and outcome, in this case “Coupon” and “YoYSpend.”

set.seed(1234)

MySplit <- SplitUplift(data = Customers, p = 0.7, group = c("Coupon", "YoYSpend"))

train <- MySplit[[1]]

valid <- MySplit[[2]]

Baseline Model

We will first fit a baseline uplift model containing “PriorSales,” and store the fitted results. The summary outputs two models, one for the control population and another for the treatment population.

Base.mod <- DualUplift(data = train, treat = "Coupon",

outcome = "YoYSpend", predictors = "PriorSales")

summary(Base.mod)

Next we will attach our predictions to the validation data and create a Performance Uplift Table object, which we can use for visuals and validation. Since we have only 300 validation observations, we will select five equal groups.

Base.fit <- cbind(valid, Pred = predict(Base.mod, valid))

Perf.base <- PerformanceUplift(data = Base.fit, "Coupon", "YoYSpend",

prediction = "Pred", nb.group = 5,

equal.intervals = T, rank.precision = 2)

Perf.base

Targeted Population (%) Incremental Uplift (%) Observed Uplift (%)

[1,] 0.2 5.469530 25.14286

[2,] 0.4 7.954545 15.83710

[3,] 0.6 10.441263 11.16071

[4,] 0.8 14.539808 19.19192

[5,] 1.0 16.651841 12.55760

Performance Table

The observed uplift is simply the proportion of treated people with the outcome of interest out of all treated people, minus the proportion of untreated people with the outcome of interest out of all untreated people. In the table it is reported separately for each group.

The incremental uplift is cumulative. It is the number of treated people with the outcome of interest less the number of untreated people with the outcome of interest, where the untreated count is first rescaled by the ratio of treated to untreated observations so that the two groups are comparable. The result is then divided by the total number of treated observations.

More concisely, incremental uplift is the net number of outcomes of interest from two equalized groups divided by the total number of treatments.
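The two definitions can be worked through by hand on a tiny data set. This is a sketch of the calculation as described above, not the package's own code. The toy data frame and its column names are hypothetical, and the rows are assumed to be already sorted into groups from highest to lowest predicted uplift.

```r
# Hypothetical validation data: 12 customers in 3 groups,
# group 1 holding the highest predicted uplift.
toy <- data.frame(
  treat   = c(1, 0, 1, 0, 1, 1, 0, 0, 1, 0, 1, 0),
  outcome = c(1, 0, 1, 1, 1, 0, 0, 1, 0, 0, 0, 1),
  group   = rep(1:3, each = 4)
)

# Observed uplift: treated response rate minus untreated response rate, per group
observed_uplift <- sapply(split(toy, toy$group), function(g) {
  mean(g$outcome[g$treat == 1]) - mean(g$outcome[g$treat == 0])
})

# Incremental uplift: cumulative over the top k groups
n_treated_total <- sum(toy$treat == 1)
incremental_uplift <- sapply(sort(unique(toy$group)), function(k) {
  top <- toy[toy$group <= k, ]
  n_t <- sum(top$treat == 1)                 # treated customers targeted so far
  n_c <- sum(top$treat == 0)                 # untreated customers targeted so far
  y_t <- sum(top$outcome[top$treat == 1])    # treated successes
  y_c <- sum(top$outcome[top$treat == 0])    # untreated successes
  (y_t - y_c * n_t / n_c) / n_treated_total  # rescale the untreated count, then normalize
})

observed_uplift
incremental_uplift
```

When every group is included, the incremental uplift reduces to the overall difference in response rates between treated and untreated customers, so the last row is the same whichever model did the ranking.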

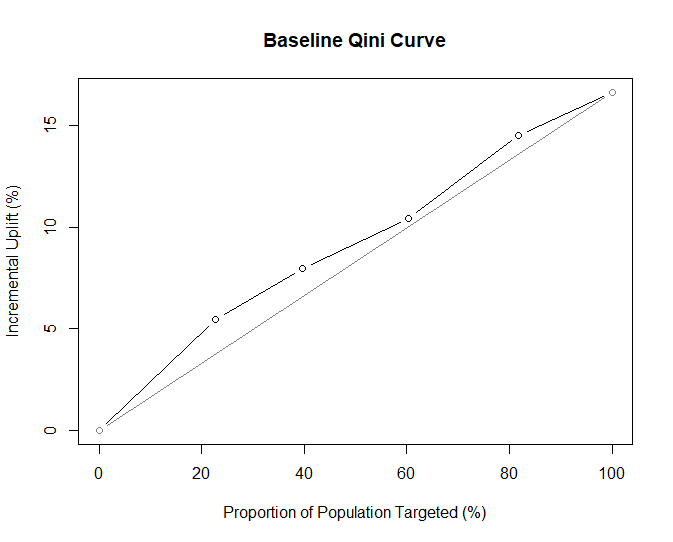

Qini Curve

A Qini curve, as described in the authors’ paper, is functionally similar to the ROC (Receiver Operating Characteristic) curve commonly used to evaluate classification models. The x-axis is the proportion of the population targeted, and the y-axis is the incremental uplift. The diagonal reference line represents the incremental uplift we would expect if customers were targeted at random.

For example, at 100% of the population the curve and the reference line meet at the overall incremental uplift of roughly 17%, because targeting everyone leaves nothing to rank. At 20% of the targeted population the model outperforms random targeting, but it still needs major improvements to be usable.

Qini Area

Similar to ROC, the area under the curve is helpful in evaluating different uplift models. We can calculate this directly with the convenient QiniArea() function. This integral is also called the Qini coefficient.

QiniArea(Perf.base)

> QiniArea(Perf.base)

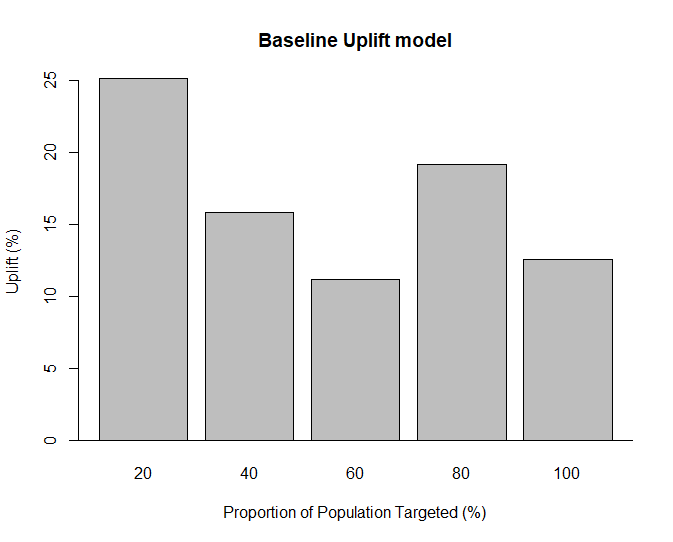

[1] 0.8522188Performance Barplot

The package can also generate a Qini barplot through its barplot() method. This simply shows the observed uplift (%) from the performance table for each group of the targeted population.

Ideally, an uplift model will be able to order observed uplift from highest to lowest which we can see is far from the case below for our validation data.

If the observed uplift appears unordered, as is the case here, it suggests instability in the model. This would mean that the people our model predicted to have the highest uplift (e.g. top 20%) do not actually have the most observed uplift and we should try a different model.
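The ordering can also be checked numerically rather than by eye. The sketch below uses the observed uplift column from the baseline performance table. The helper name is_ordered() is hypothetical and not part of tools4uplift.

```r
# Observed uplift (%) per group for the baseline model, best-ranked group first
observed_base <- c(25.14286, 15.83710, 11.16071, 19.19192, 12.55760)

# TRUE only if observed uplift never increases from one group to the next
is_ordered <- function(u) all(diff(u) <= 0)
is_ordered(observed_base)

# Rank correlation between group position and observed uplift;
# a perfectly ordered model would give -1
rho <- cor(seq_along(observed_base), observed_base, method = "spearman")
rho
```

For the baseline model the check fails and the rank correlation is only -0.5, which matches the visual impression of an unstable ranking.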

Further below we will see what the Qini barplot looks like in a better model.

barplot(Perf.base, main = "Baseline Uplift model")

Feature Selection

To improve our model we will need to select better features.

The tools4uplift package contains an algorithm for finding the best features with which to predict uplift. This relies on a lasso approach to select the features that result in the highest Qini coefficient and, in theory, the best model.

This is very helpful because given even a modest number of features the number of possible interactions with the treatment variable can grow extremely large and ignoring relevant interactions can cause issues with causal models. To draw a causal conclusion, which is what uplift implies, we need to have a saturated model or a sufficient set of confounders such that we can draw a causal inference from our treatment variable.

Although it is advisable to scale or normalize data before using regularized logistic regression, for the sake of simplicity we will keep our variables as they are.

features <- BestFeatures(data = train, treat = "Coupon", outcome = "YoYSpend",

predictors = colnames(Customers[,2:4]),

equal.intervals = TRUE, nb.group = 5,

validation = FALSE)

print(features)

> features

[1] "Coupon" "Gender" "Loyal" "PriorSales"

attr(,"class")

[1] "BestFeatures"

The best features recommended are Gender, Loyal, and PriorSales. Notably, no interaction terms are suggested. This is not surprising since we did not program any interactions into our test data. When interactions are expected, there is a related function called InterUplift() that fits an uplift model with interaction terms. Its input parameter accepts “all” for a saturated model, or “best” for automated Qini feature selection.

Full Model

Although we should achieve a similar result with InterUplift(), we can also pass the selected features, ignoring “Coupon,” as predictors to the DualUplift() function for our training dataset.

### DualUplift

Uplift.mod <- DualUplift(data = train, treat = "Coupon", outcome = "YoYSpend", predictors = features[2:4])

summary(Uplift.mod)

### Alternative for Automatic features / Interactions

Inter.mod <- InterUplift(data = train, treat = "Coupon", outcome = "YoYSpend", predictors = colnames(Customers[,2:4]), input = "best")

summary(Inter.mod)

Full Model Evaluation

Let’s evaluate our full model to see if it performs better than the baseline model.

Full.fit <- cbind(valid, Pred = predict(Uplift.mod, valid))

Perf.full <- PerformanceUplift(data = Full.fit, "Coupon", "YoYSpend",

prediction = "Pred", nb.group = 5,

equal.intervals = T, rank.precision = 2)

Perf.full

QiniArea(Perf.full)

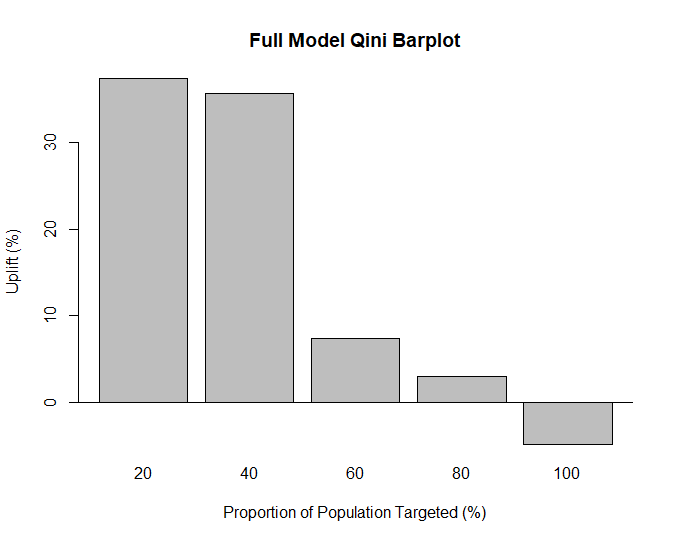

barplot(Perf.full)

> QiniArea(Perf.full)

[1] 4.620119

Targeted Population (%) Incremental Uplift (%) Observed Uplift (%)

[1,] 0.2 6.867375 37.373737

[2,] 0.4 14.658293 35.714286

[3,] 0.6 17.266824 7.407407

[4,] 0.8 16.727487 3.030303

[5,] 1.0 16.651841 -4.823529

Model Performance

This model looks significantly better than our first attempt. It manages to correctly order observations based on predicted uplift.

This result illustrates why we would want to model uplift this way. The top 20% of our population has a large observed uplift (about 37%), while the bottom 20% has a negative observed uplift (about -5%). In other words, the promotion was effective for some, but not all, customers, and that is critical information for marketers looking to improve their targeting.

As a secondary consideration, it may not be obvious from the overall data that the promotion is having a positive impact on subsets of customers. A business could end up throwing the proverbial baby out with the bathwater instead of looking for ways to target more effectively.

The Qini area is much larger for our full model (4.6, compared with 0.85 for the baseline), which indicates that this model fits the validation data better.

Note that the total amount of incremental uplift is the same for both models. The key difference is that the second model does a better job of predicting uplift.
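To see where the gap between the two models comes from, the area between each Qini curve and the random-targeting diagonal can be approximated with the trapezoid rule, using the rounded values printed in the performance tables. This is a rough sketch. The function name qini_area_sketch() is hypothetical, and the package's own calculation may differ in its details, so these figures are not expected to reproduce the QiniArea() output exactly.

```r
# Trapezoid-rule area between a Qini curve and the random-targeting diagonal
qini_area_sketch <- function(population, incremental) {
  x <- c(0, population)      # start both axes at the origin
  y <- c(0, incremental)
  curve_area  <- sum(diff(x) * (head(y, -1) + tail(y, -1)) / 2)
  random_area <- tail(x, 1) * tail(y, 1) / 2   # triangle under the diagonal
  curve_area - random_area
}

population <- seq(0.2, 1, by = 0.2)
inc_base   <- c(5.469530, 7.954545, 10.441263, 14.539808, 16.651841)
inc_full   <- c(6.867375, 14.658293, 17.266824, 16.727487, 16.651841)

area_base <- qini_area_sketch(population, inc_base)
area_full <- qini_area_sketch(population, inc_full)
c(baseline = area_base, full = area_full)
```

Both curves end at the same point, so the entire difference in area comes from how quickly the full model accumulates incremental uplift in the first few groups.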

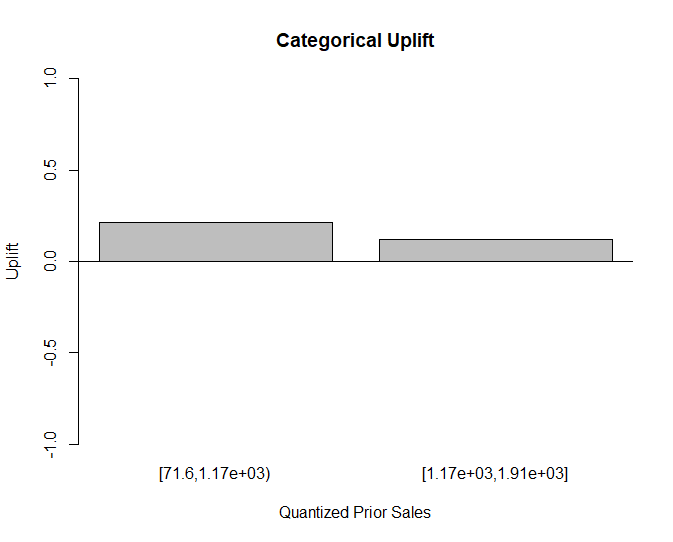

Category Uplift

Suppose we are interested in testing a model with a continuous variable quantized to discrete values. The tools4uplift package makes this process simple with the BinUplift() function, and there is a related function for bi-variate continuous variables (interactions).

This function allows us to select a variable and a level of significance for splitting based on ability to predict uplift.

CatBin.mod <- BinUplift(Customers, treat = "Coupon", outcome = "YoYSpend", x = "PriorSales", alpha = 0.05)

CatData <- cbind(Customers, QuantizedPriorSales = predict(CatBin.mod, Customers$PriorSales))

Plotting Categorical Uplift

We can plot out categorical uplift with the UpliftPerCat() function which provides a helpful view of uplift based on the split points of the continuous variable.

UpliftPerCat(data = CatData, treat = "Coupon", outcome = "YoYSpend",

x = "QuantizedPriorSales", xlab='Quantized Prior Sales', ylab='Uplift', ylim=c(-1,1), main = "Categorical Uplift")

[1] "The variable PriorSales has been cut at:"

[1] 1167.413
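To make the effect of a single cut point concrete, the same kind of binning can be imitated with base R's cut(). This only illustrates the idea and is not how the package's predict() method is implemented. The sample values are hypothetical, and the choice to place a value exactly at the cut point in the lower bin is an assumption.

```r
cut_point   <- 1167.413
prior_sales <- c(850, 1100, 1167.413, 1300)   # hypothetical customers

# Two bins: at or below the cut point, and above it
bins <- cut(prior_sales, breaks = c(-Inf, cut_point, Inf))
table(bins)
```

With one cut point the continuous variable collapses into a two-level factor, which is then used as a predictor in place of the original column.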

Once variables are discretized, the process is identical to the above examples except that we discard the prior continuous variables. To spice things up we will use a saturated interaction model with InterUplift() by specifying input = “all” along with the new categorical variable we have created.

set.seed(1234)

MySplit <- SplitUplift(data = CatData, p = 0.7, group = c("Coupon", "YoYSpend"))

train <- MySplit[[1]]

valid <- MySplit[[2]]

Inter.mod <- InterUplift(data = train, treat = "Coupon", outcome = "YoYSpend", predictors = colnames(valid[,c(2:3,6)]), input = "all")

summary(Inter.mod)

Sat.data <- cbind(valid, Pred = predict(Inter.mod, valid, treat = "Coupon"))

Sat.Perf <- PerformanceUplift(data = Sat.data, "Coupon", "YoYSpend",

prediction = "Pred", nb.group = 5,

equal.intervals = T, rank.precision = 2)

print(Sat.Perf)

Targeted Population (%) Incremental Uplift (%) Observed Uplift (%)

[1,] 0.2 8.095238 42.988506

[2,] 0.4 12.739641 27.272727

[3,] 0.6 17.215343 8.695652

[4,] 0.8 16.712235 3.571429

[5,] 1.0 16.651841 -1.858108

Again, we will validate the goodness of fit with the Qini coefficient.

QiniArea(Sat.Perf)

[1] 4.470437

Although the saturated model with all terms and interactions performs respectably, the best model is the one recommended by BestFeatures(). This makes sense since we did not program any interactions into our example dataset.

Conclusion

Uplift is difficult to measure even with randomized groups, and basic business methods are unfortunately not well suited to causal inference even for simple problems. Moreover, typical measures such as average treatment effects, although an improvement, lack the nuance to distinguish the impact of the treatment on particular groups of customers.

The tools4uplift package is a great way to quickly explore different uplift models, identify important features, and more importantly identify customer groups who benefit from promotion, and customers who do not.

References

Brumback, B. Fundamentals of Causal Inference with R; CRC / Chapman Hall, 2022.

Belbahri, M.; Murua, A.; et al. Uplift Regression: The R Package Tools4Uplift.
