Business Insights

Measuring sales lift is challenging, especially when it comes to customers. A product can’t be both on-sale and regular price at the same time. Similarly, customers can’t redeem and offer, and also not redeem an offer.

There are some heuristics used to calculate lift such as taking the 52 week average of non-promotional weeks as a baseline. However, this rule of thumb is not ideal because it assumes that there is no seasonality or business growth. For example, in a situation of negative growth, this approach will under estimate the value of a recent promotion.

In order to reliably estimate promotional lift we need to look to methods of causal inference. These methods look to create a counter-factual estimate of what the world would have looked like had we not run the promotion.

Approaches to Counter-Factuals

One approach is to use “like products” as a reference, or “like stores” that did not run the promotion. However, this can be cumbersome and it requires meeting strict assumptions. A more flexible option is BART (Bayesian Additive Regression Trees) for causal inference.

BART dispenses with the requirement to find lookalikes with a parallel trends, and does not require a pre-period, but it does require identifying relevant confounding variables and some care in constructing an artificial control group to get the best results.

Since BART does not depend on lookalike products, and provides credible estimation ranges, it is also a technique that lends itself to well automated reporting of results.

Let’s explore using BART to measure the benefit of a promotional period.

Results

We conclude that the average daily sales lift for a particular promotion over its active period was $40.

Follow along below in R Studio to see how we arrived at this result.

BART

BART is similar to random forest models such as gradient boosting, except that a Bayesian prior is used to constrain the individual trees to be weak learners.

The model uses an additive sum of tiny trees to approximate a function, since BART is multivariate it is able to naturally capture interactions between variables.

BART Model

We will use the bartCause package in R which is a package designed for causal analysis with BART models.

This model requires three inputs:

- Response Variable: Continuous or binary dependent variable we are interested in.

- Treatment Variable: A binary treatment indicator.

- Confounders: Regression coefficients we intend to condition on.

It is possible to use a formula and pass a data argument or to separate out the inputs and specify them individually in the bartc function.

Explore Data

First let’s explore the dataset, the example data is available at end of this article.

We have a date index, sales number, retail price, day of the week, and a binary promotional indicator.

head(SalesData) TrsDate Sales RetailPrice WeekDay Promo

1 1 247.05 5.48 5 0

2 2 278.99 5.60 6 0

3 3 362.34 5.58 7 0

4 4 384.30 5.56 1 0

5 5 477.63 5.64 2 0

6 6 345.87 5.62 3 0Variable Overlap

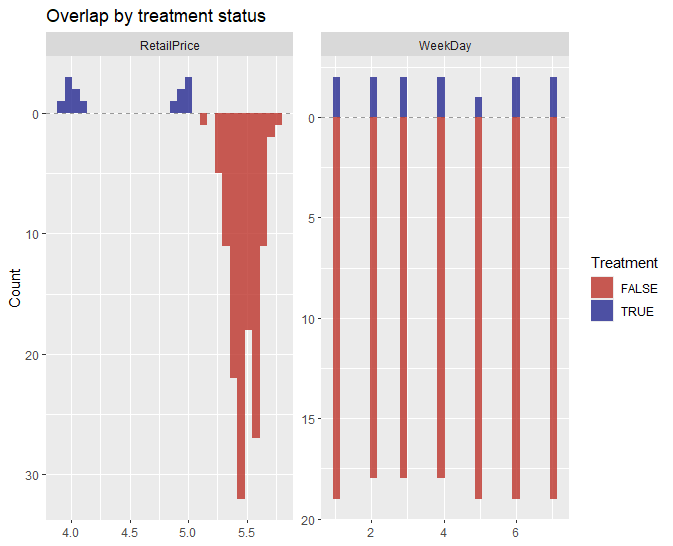

Before fitting it can be a good idea to check the feature overlap between our treatment and control populations. In this case those are days and retail prices.

plot_overlap_vars(

.data = SalesData,

treatment = "Promo",

confounders = confounders

)

These charts look a bit strange because the number of control days is larger the number of treatment days but all weekdays are covered in both sets with approximately even proportions.

Retail pricing is another matter. As it happens, low prices only exist when items are being promoted so there are poor matches between groups on that dimension. This fact does make it more difficult to differentiate a causal effect viz-a-viz promotion without pricing.

Ideally, we would have examples of non-promoted low price days but since we do not, we will make do with what is available. BART will take price into consideration when fitting the response even if our control doesn’t have perfect representation across prices.

Model Fitting

We will pass Sales, Promo (our treatment indicator), and a matrix of confounders to the function bartc.

It is very important to identify the appropriate confounders to condition on in order to make causal inference reliable. For example, if weekday is relevant and we fail to condition on it then our estimate will be less accurate.

Let’s fit the model and discuss some of the other parameters below.

library(bartCause)

library(plotBart)

library(tidyverse)

confounders <- c("RetailPrice", "WeekDay")

mybart <-bartc(

response = SalesData$Sales,

treatment = SalesData$Promo,

confounders = as.matrix(SalesData[, confounders]),

method.rsp = "bart",

method.trt = "bart",

commonSup.rule = "none",

keepTrees = T,

n.samples = 1000

)Propensity Scores

This type of causal inference requires a plausible control group that matches the treatment group in order to get the best results. Imagine a medical study with 15 males who receive a treatment, in that case a group of 15 females would not be a good choice for the control.

For this reason bartCause has a number of parameters to handle matching between control and treatment groups. While assigning men and women is fairly simple, assignments become a difficult problem as dimensionality increases. A method known as propensity scoring can help us understand the composition of our treatment and control groups.

Propensity scores assist with control group boundaries, so we can ensure a similar makeup of treatment and control members. The three parameters below allow for control over this process, and it is also possible to manually assign propensity scores using other methods.

- method.trt specifies the methodology for propensity score calculation, options are “bart,” “glm” which fits a logistic classifier, and “none” which skips calculating propensity scores.

- method.rsp specifies the response model fit which can be the default “bart” for an average response, “p.weight” for weighting by propensity scores, or “tmle” (targeted minimum loss) which is the same as weighting but with an additional adjustment to hone in on causal effects.

- commonSup.rule is for excluding members from a group where they are not a good match based on propensity scoring. Alternatively, we can specify “none” to keep all members despite their score.

Other Parameters

The keepTrees function keeps the tree data which can be used for refitting and may be necessary for some functions available in the plotBart package for graphing results. If you don’t need it, this can be set to false.

Finally, n.samples determines the number of MCMC samples to take in each of the 10 default chains. The default for samples is 500, which is fine for testing but may be insufficient for final analysis depending on the data.

There are a lot of options available in this package, and the reference manual is worth a read.

Summary Statistics

Let’s run some summary statistics on our new model.

summary(mybart)Call: bartc(response = SalesData$Sales, treatment = SalesData$Promo,

confounders = as.matrix(SalesData[, confounders]), method.rsp = "bart",

method.trt = "bart", commonSup.rule = "none", keepTrees = T,

n.samples = 1000)

Causal inference model fit by:

model.rsp: bart

model.trt: bart

Treatment effect (population average):

estimate sd ci.lower ci.upper

ate 40.04 53.4 -64.61 144.7

Estimates fit from 143 total observations

95% credible interval calculated by: normal approximation

population TE approximated by: posterior predictive distribution

Result based on 1000 posterior samples times 10 chains

> Average Treatment Effect

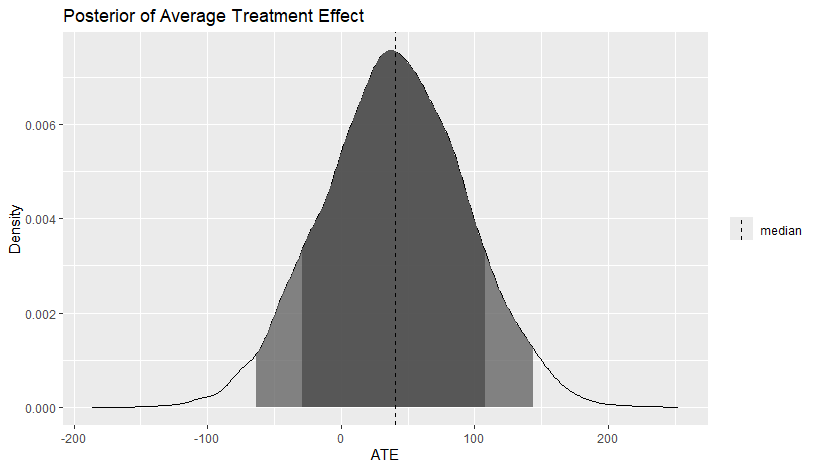

The summary statistics indicate that our Average Treatment Effect (ATE) is $40, and the 95% credible interval is between -$65 and $145. The standard deviation is about $53.

As its name suggests, the ATE calculates the difference between the average effect on the treatment group, and the average effect on the control group. This is the causal effect we are interested in. In this case the average effect over all promotional days is $40, although there appears to be quite a lot of daily variation despite conditioning on WeekDay.

Note that it is possible to specify ATT (Average Effect on Treatment) or ATC (Average Effect on Control) in the estimand parameter. That is if we want to understand the estimated average effect on either group in isolation.

Plotting Results

Although there are options in the main package, we can get some nice plots from the plotBart helper package.

plot_PATE(

.model = mybart,

type = "density",

ci_80 = T,

ci_95 = T,

.median = T

)

Posterior Effects

For our sales data, the average effect is above zero and while it is clear that not all days benefited from this promotion, the average effect over all days is, on balance, a $40 improvement.

We might be tempted to conclude the promotion was successful based on the point estimate, but we can see that after incorporating uncertainty into the parameter estimates, it is possible that the ATE is zero or less. If it is less than zero, the the promotion didn’t do anything except cost money.

The above chart suggests it is very likely the promotion did provide positive benefit; or looking at it another way, if we ran this promotion many times we would expect the ATE to be positive the vast majority of times.

This type of analysis is the hallmark of Bayesian approaches, in that it allows us to not only report a point estimate, but also speak directly to risk and uncertainty.

Conclusion

Bayesian Additive Regression Trees can help make calculating causal inference on promotional lift easier to solve. In some situations BART may be the only option, such as where we do not have past data.

Provided we pay attention to group makeup and conditioning BART provides a powerful technique for evaluating our promotional spend and is well suited for automated workflows.

References

Chipman H.; George, E.; McCulloch, R. BART: Bayesian Additive Regression Trees. The Annals of Applied Statistics 2010, Vol.4, No.1, 266-298.

Ebrahim Valojerdi A, Janani L. A brief guide to propensity score analysis. Med J Islam Repub Iran. 2018 (7 Dec);32:122. https://doi.org/10.14196/mjiri.32.122

Diaz, I. Machine learning in the estimation of causal effects: targeted minimum loss-based estimation and double/debiased machine learning. Biostatistics, Volume 21, Issue 2, April 2020, Pages 353–358, https://doi.org/10.1093/biostatistics/kxz042

Example Data

This data can be loaded into R to follow along with the above example.

SalesData <- structure(list(TrsDate = 1:143, Sales = c(247.05, 278.99, 362.34,

384.3, 477.63, 345.87, 274.5, 296.46, 334.89, 400.77, 521.55,

351.36, 203.13, 197.64, 230.58, 263.52, 362.34, 334.89, 466.65,

219.6, 247.05, 258.03, 290.97, 290.97, 527.04, 389.79, 334.89,

241.56, 201.15, 299.97, 312.93, 329.4, 296.46, 274.5, 367.83,

206.62, 247.05, 340.38, 461.16, 329.4, 285.48, 274.5, 225.09,

170.19, 225.09, 340.38, 340.38, 186.66, 285.48, 340.38, 296.46,

323.91, 428.22, 192.15, 334.89, 334.89, 269.01, 284.98, 351.36,

400.77, 318.42, 219.6, 263.52, 257.05, 323.91, 312.93, 488.61,

271.51, 329.4, 263.52, 269.01, 318.42, 274.5, 307.44, 378.81,

318.42, 269.01, 251.04, 258.03, 323.91, 422.73, 296.46, 263.52,

285.48, 334.89, 356.85, 356.85, 505.08, 312.93, 266.26, 279.99,

274.5, 345.87, 323.91, 378.7, 384.3, 307.44, 285.48, 285.48,

345.87, 329.4, 502.08, 389.79, 312.93, 230.58, 306.94, 312.93,

285.48, 285.48, 345.37, 312.93, 258.03, 236.07, 236.07, 263.52,

285.48, 299.46, 76.86, 98.82, 263.87, 424.55, 474.45, 524.35,

454.49, 374.65, 451.48, 458.98, 405.49, 510.95, 428.94, 447.45,

403.45, 251.05, 274.5, 323.91, 340.38, 296.46, 323.91, 269.01,

258.03, 274.5, 181.17, 219.6), RetailPrice = c(5.48, 5.6, 5.58,

5.56, 5.64, 5.62, 5.45, 5.23, 5.36, 5.28, 5.49, 5.47, 5.52, 5.4,

5.45, 5.56, 5.45, 5.43, 5.58, 5.52, 5.49, 5.56, 5.44, 5.56, 5.45,

5.51, 5.41, 5.31, 5.71, 5.48, 5.59, 5.56, 5.46, 5.39, 5.55, 5.31,

5.73, 5.37, 5.41, 5.41, 5.48, 5.5, 5.56, 5.32, 5.57, 5.55, 5.44,

5.35, 5.43, 5.39, 5.5, 5.41, 5.41, 5.54, 5.4, 5.54, 5.34, 5.55,

5.31, 5.37, 5.58, 5.52, 5.66, 5.53, 5.44, 5.61, 5.39, 5.49, 5.56,

5.5, 5.38, 5.48, 5.64, 5.55, 5.39, 5.43, 5.64, 5.67, 5.23, 5.64,

5.16, 5.58, 5.35, 5.63, 5.27, 5.49, 5.35, 5.64, 5.44, 5.45, 5.48,

5.37, 5.53, 5.58, 5.51, 5.57, 5.47, 5.44, 5.36, 5.27, 5.48, 5.33,

5.46, 5.42, 5.52, 5.41, 5.66, 5.44, 5.42, 5.55, 5.4, 5.62, 5.3,

5.41, 5.42, 5.58, 5.61, 5.43, 5.43, 5.01, 4.97, 5.02, 5.03, 4.91,

4.85, 3.95, 4.04, 4.13, 3.91, 4.04, 3.96, 3.97, 5.54, 5.55, 5.44,

5.77, 5.58, 5.44, 5.47, 5.41, 5.34, 5.59, 5.38), WeekDay = c(5L,

6L, 7L, 1L, 2L, 3L, 4L, 5L, 6L, 7L, 1L, 2L, 3L, 4L, 5L, 6L, 7L,

1L, 2L, 3L, 4L, 5L, 6L, 7L, 1L, 2L, 3L, 4L, 5L, 6L, 7L, 1L, 2L,

3L, 4L, 5L, 6L, 7L, 1L, 2L, 3L, 4L, 5L, 6L, 7L, 1L, 2L, 3L, 4L,

5L, 6L, 7L, 1L, 2L, 3L, 4L, 5L, 6L, 7L, 1L, 2L, 3L, 4L, 5L, 6L,

7L, 1L, 2L, 3L, 4L, 5L, 6L, 7L, 1L, 2L, 3L, 4L, 5L, 6L, 7L, 1L,

2L, 3L, 4L, 5L, 6L, 7L, 1L, 2L, 3L, 4L, 5L, 6L, 7L, 1L, 2L, 3L,

4L, 5L, 6L, 7L, 1L, 2L, 3L, 4L, 5L, 6L, 7L, 1L, 2L, 3L, 4L, 5L,

6L, 7L, 1L, 2L, 3L, 4L, 6L, 7L, 1L, 2L, 3L, 4L, 5L, 6L, 7L, 1L,

2L, 3L, 4L, 5L, 6L, 7L, 1L, 2L, 3L, 4L, 5L, 6L, 7L, 1L), Promo = c(0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0)), row.names = c(NA,

-143L), class = "data.frame")Recent Post

Excel Histograms

- 31 August 2025

- 3 min read

Combine Data in Excel with Power Query

- 31 July 2025

- 6 min read

Excel Radar Charts for Demographics

- 28 June 2025

- 3 min read

Writing Unstructured Data with Sink in R

- 25 May 2025

- 4 min read

Sales Lift Estimation with tools4uplift

- 30 April 2025

- 11 min read